Why is trust in AI decreasing even as the technology of AI advances?

A peer-reviewed study on trust and AI, published in the June 2024 Journal of Hospitality Marketing & Management, has garnered headline coverage on CNN and in other media outlets. Entitled ‘Adverse impacts of revealing the presence of “Artificial Intelligence (AI)” technology in product and service descriptions on purchase intentions: the mediating role of emotional trust and the moderating role of perceived risk’ (Cicek, Gursoy, & Lu, 2024), the research captures how fear and anxiety about AI manifest in the marketplace and in consumer choices.

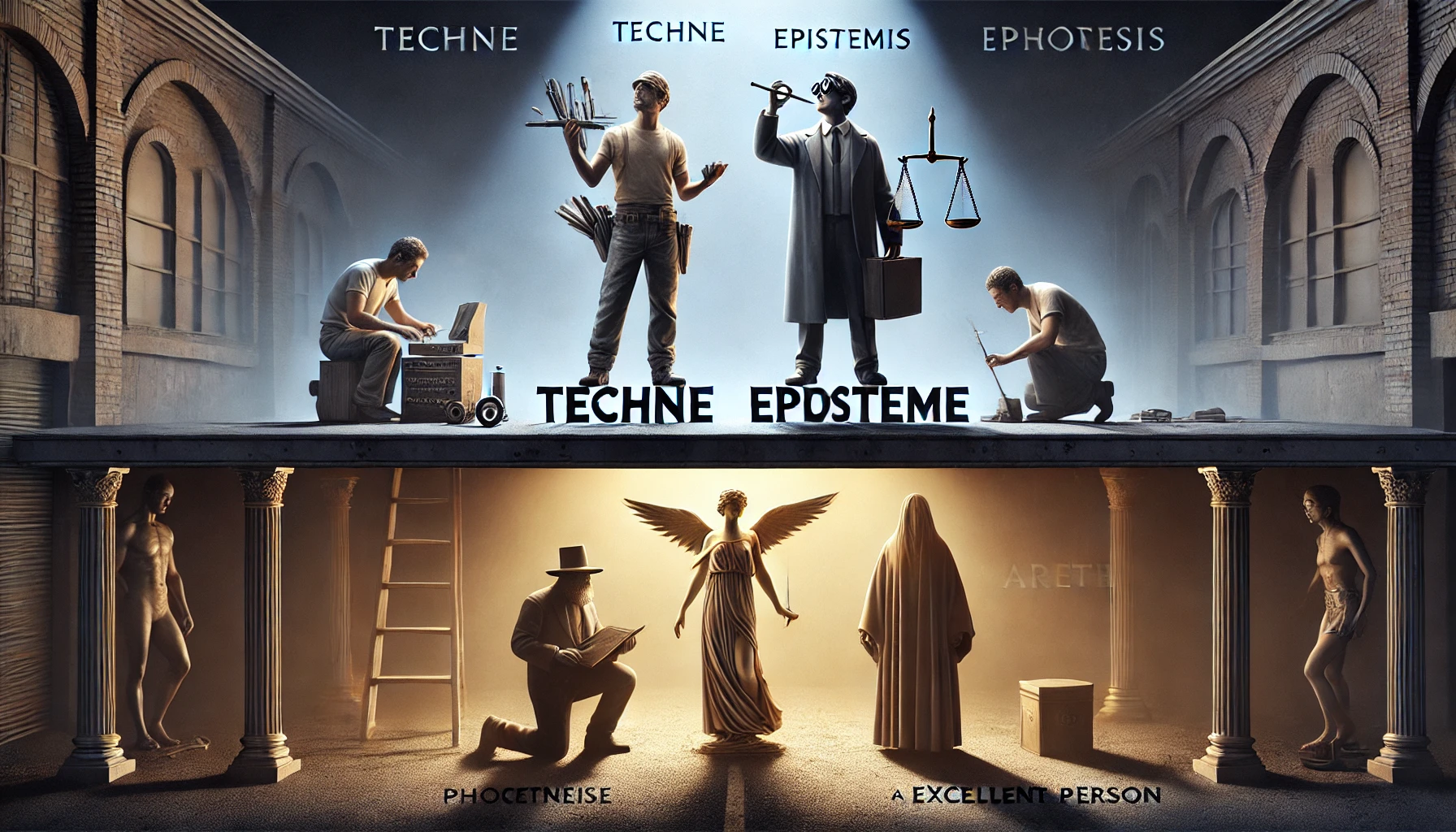

In many ways, consumers’ lack of trust in products labeled “AI” is predictable, as argued in “Shifting from Trusted AI to Constructing Trustworthy AI” and “Taking Steps to Constructing Trustworthy AI.” The mistrust stems from the tendency of AI and other technologies to privilege certain types of knowledge, specifically technical craft knowledge (techne) and scientific principles (episteme), over the tacit, embodied types of knowledge humans employ in their day-to-day lives. This privileging of scientific and technical expertise creates a disconnect among AI’s power, the technologists who develop it, and its marketplace promise, which hinges on whether people trust or mistrust it.

Dunning-Kruger knowledge-type effect

Technologists, moreover, often confuse a technology’s power with its market potential. Their focus on techne and episteme creates a knowledge-type variation of the Dunning-Kruger effect, which can be called the Dunning-Kruger knowledge-type effect. The original Dunning-Kruger effect is an increasingly well-known cognitive bias that causes people to overestimate their own abilities or underestimate the abilities of others. It occurs when people who lack knowledge or skill in a particular area perceive a concept as simple precisely because their own knowledge of it is limited. This leads them to believe they are more competent than they actually are, and as a result, they are less inclined to explore the concept further.

In the Dunning-Kruger knowledge-type effect, technologists’ high expertise in technical and scientific knowledge, combined with their limited grasp of the other types of knowledge needed to build market adoption (practical wisdom, phronesis; cunning intelligence, metis; aspirational striving, arete; and so on), leads them to believe they do not need to understand these other types of knowledge, nor to consider the knowledge of the people who possess them.

The Dunning-Kruger knowledge-type effect creates a bias that calls into question the legitimacy and value of other types of knowledge. This may be especially true in high-stakes technical settings where episteme and techne are essential to the products driving the organization.

And yet, individuals and organizations want to succeed. So why don’t individuals in organizations prioritize the types of knowledge most relevant to achieving their goals?

AI mistrust: Power and Privileging

The answer lies in power and human nature. The instinct to maintain power is a deep and ancient root in human social structures. This ancient genetic factor often overshadows the more recently evolved capacity to envision promises about the future. Consequently, immediate power based on position, title, and the like takes precedence over future potential, and individuals in organizations privilege certain types of knowledge accordingly. This dynamic explains why a pure meritocracy, based on the full spectrum of knowledge possessed by individuals and groups, is never the organizing principle of an organization.

In fact, managing the tension between the selfishness of individual power and the more altruistic promise held out for the wider group is arguably the core challenge facing most companies. Moreover, while traditional hierarchies may appear outdated in Silicon Valley’s startup culture, the power structures within these organizations have changed in form, not in their intrinsic nature. The power effects privileging techne and episteme remain firmly in place.

The technologists who have expertise in these types of knowledge tie it to power in an inseparable duality. As Foucault put it, “It is not possible for power to be exercised without knowledge, it is impossible for knowledge not to engender power” (Foucault, 1980).

Other types of knowledge, such as the action-based cunning intelligence used in sales and political maneuvering (metis), the ethical decision-making and practical wisdom used in navigating social realities (phronesis), and the aspirational logic of humanity’s incessant strivings, visions, and goals toward excellence (arete), have not disappeared; instead, they have been pushed further offstage.

In the case of consumers, the lack of trust in AI is not merely because they don’t trust technologists and Silicon Valley tech firms. Nor is it solely because they lack an understanding of what AI is or of the positive and negative things AI can do.

It is because consumers recognize the immense power of AI. They sense that AI will impact their lives, but also that it is built on only a subset of the types of knowledge they value. Moreover, the technologists who employ this subset of knowledge insist that they can be trusted without demonstrating trustworthiness, which would require drawing on the types of knowledge consumers value.

As noted earlier, the privileging of technical and scientific knowledge is pervasive among technologists. Indeed, these types of knowledge are embedded in the fundamental structure of AI as a technology. Because technologists’ dispositions are deeply shaped by these types of knowledge, and AI itself is built on them, the Dunning-Kruger knowledge-type effect is often invisible to those inside these companies.

The bias toward these types of knowledge is doxa: taken for granted as just the way things are, it goes unnoticed. This is not true, however, for consumers and others outside the AI ecosystem. The study makes clear that anxiety over AI is real and has noticeable impacts on the marketplace.

And yet, at events and conferences, technologists continue to claim the entire path to the future of AI as their own. The deeply woven relationship between techne and episteme and power is at the bedrock of Silicon Valley culture, whether a company is located there or transposed elsewhere in the world. Building the path to trust in AI will involve constructing trustworthy AI. That will include raising the profile of the other types of knowledge consumers value and weaving an understanding of them (phronesis, metis, arete) into the fabric of AI.

References

Cicek, M., Gursoy, D., & Lu, L. (2024). Adverse impacts of revealing the presence of “Artificial Intelligence (AI)” technology in product and service descriptions on purchase intentions: the mediating role of emotional trust and the moderating role of perceived risk. Journal of Hospitality Marketing & Management, 1–23. https://doi.org/10.1080/19368623.2024.2368040

Foucault, M. (1980). Power/Knowledge: Selected interviews and other writings, 1972–1977 (C. Gordon, L. Marshall, J. Mepham, & K. Soper, Trans.). New York: Pantheon.